Secrets to a Dynamite Public Sector Analytics Program

Uncovering meaningful insights from months or years of Web metrics is daunting, at best. So how do you use metrics to make real improvements to your website?

Whether you’re just getting started in Web analytics or you want to take your program to the next level, you should focus on accurate data, customer service, and concrete goals, said Sam Bronson, Web Analytics Program Manager at the Environmental Protection Agency (EPA), in a Sept. 18 webinar sponsored by DigitalGov University.

Set Goals – and Hold Yourself Accountable

To be meaningful, metrics should be connected to goals, Bronson emphasized.

“No matter what the project or effort is, you need to define the goal,” he said. “Keep people accountable. If they come to you saying, ‘These are the metrics we think are important for our project,’ always counter with, ‘Why are those metrics important? What are you trying to accomplish? What is the actual goal?’ Whatever it takes to get them to define the goal, don’t let up until you have that goal.”

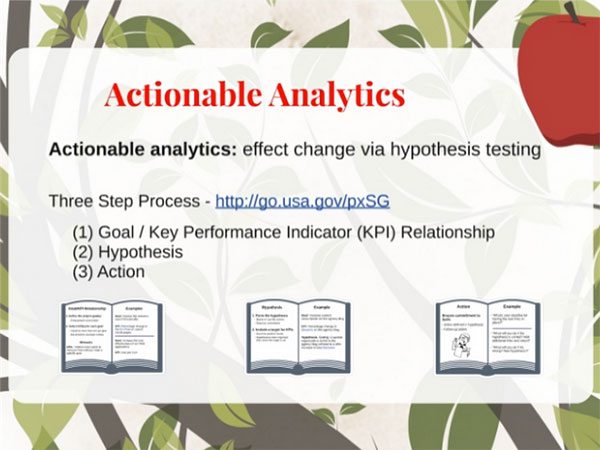

With baseline metrics and concrete goals, you can identify key performance indicators (KPIs) for your site or specific projects. Don’t stop at setting KPIs, though. For each KPI, state a hypothesis about the results you expect from the actions it drives, and plan follow-up actions based on whether that hypothesis holds.

Get the Data Right

Web analytics is about much more than numbers, but accurate data is a must. That may seem to go without saying, but it’s surprisingly easy to miss critical steps that ensure you’re starting with quality metrics, Bronson said.

His number-one tip in this area: Make sure your analytics tool tracks visits across all of your agency’s websites, including sub-domains (for example, “site.agency.gov”) and “vanity URLs” named for a program.

For example, if someone goes to the EPA homepage, types a term in the search box, and clicks on www.energystar.gov from the search results list, that activity should be captured as one session, not three, even though those pages live on different EPA-managed domains and sub-domains. Out of the box, many analytics tools would treat these as entirely separate websites and count three sessions, producing misleading results. So it’s important to point the tool at the correct data before you start analyzing.
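To make the difference concrete, here is a minimal Python sketch of how a session count changes when an agency’s hosts are grouped together. It assumes a hypothetical hit log, a 30-minute inactivity timeout (a common convention, not something Bronson specified), and illustrative hostnames such as `search.epa.gov`; it is not how any particular analytics product implements sessions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


# Hypothetical hit record: one pageview by one visitor on one host.
@dataclass
class Hit:
    visitor_id: str
    host: str
    time: datetime


# Assumed inactivity cutoff; real tools let you configure this.
SESSION_TIMEOUT = timedelta(minutes=30)


def count_sessions(hits, agency_hosts=None):
    """Count sessions in a list of hits.

    If agency_hosts is None, every host is treated as a separate site,
    mimicking the out-of-the-box behavior. Otherwise, hosts in
    agency_hosts are collapsed into one property, so a visitor moving
    between them stays in a single session.
    """
    last_seen = {}  # (visitor, site) -> time of that visitor's latest hit
    sessions = 0
    for hit in sorted(hits, key=lambda h: h.time):
        if agency_hosts is not None and hit.host in agency_hosts:
            site = "agency"
        else:
            site = hit.host
        key = (hit.visitor_id, site)
        previous = last_seen.get(key)
        # A new session starts on the first hit or after a long gap.
        if previous is None or hit.time - previous > SESSION_TIMEOUT:
            sessions += 1
        last_seen[key] = hit.time
    return sessions


# Usage with made-up timestamps for the EPA search example.
t0 = datetime(2024, 9, 18, 9, 0)
path = [
    Hit("v1", "www.epa.gov", t0),
    Hit("v1", "search.epa.gov", t0 + timedelta(minutes=1)),
    Hit("v1", "www.energystar.gov", t0 + timedelta(minutes=2)),
]
agency_hosts = {"www.epa.gov", "search.epa.gov", "www.energystar.gov"}

print(count_sessions(path))                # 3: each host counted as its own site
print(count_sessions(path, agency_hosts))  # 1: one cross-domain session
```

In practice you would get the grouped behavior by configuring your analytics tool’s cross-domain tracking rather than by writing code; the sketch only shows why the same visit can be counted as three sessions or as one.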

“It’s a crucial step,” Bronson said. “It’s the difference between accurate metrics and inflated metrics.”

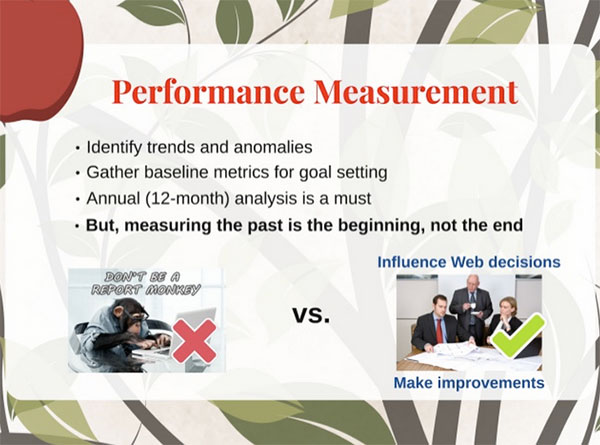

Don’t Be a ‘Report Monkey’

Getting the data right doesn’t mean your only job is to churn out numbers month after month. Your customers (the managers and staff in your agency and, ultimately, the people who use your website) are best served by analysis and recommendations that go beyond straight reporting.

“You don’t want to become a report monkey,” Bronson said. “What you really want to be doing is influencing Web decisions. You want to make those improvements. So performance measurement, while important, is not the end of the road.”

He recommended cultivating a user community that extends beyond the analytics team itself. That can include maintaining an active (but not overwhelming) listserv; creating an internal blog on analytics, which can become an informal training tool in its own right; making reference pages, training videos, and reports readily available; and encouraging “evangelists” to spread the word about your program.

Finally, it’s important to set goals for the Web analytics program itself and to hold yourself accountable for them in your performance review, Bronson added.

“You want to make sure that you’re not becoming stagnant and getting in the routine of just pumping out the reports and the templates that you’re used to or that people are requesting,” he said. “You may be the only one … looking out for your program, so you know the power that it can provide.”

Hannah Gladfelter Rubin is an Information Research Specialist with the Congressional Research Service at the Library of Congress. The views expressed herein are those of the author and are not presented as those of the Congressional Research Service or the Library of Congress.